center line extraction

Published:

图像中心线提取

一种中心线提取方法

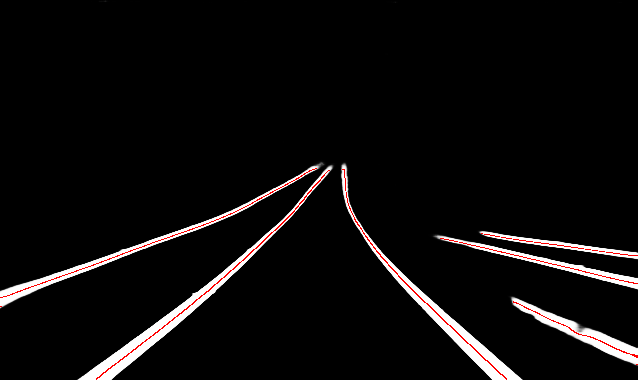

最近偶然看到了一种图像上的中心线段提取方法,其提取结果如下图所示:

算法为基于hessian矩阵的Steger方法,其在CPU上的实现代码如下所示: 方法的大致流程包括高斯滤波,一阶偏导,二阶偏导,求解hessian矩阵的最大特征值对应的特征向量,最终将满足条件的图像像素放入中心线像素集合

// lane center extraction func

// Steger C. An unbiased detector of curvilinear structures. IEEE Trans Pattern Anal Mach Intell[J].

// IEEE Transactions on Pattern Analysis & Machine Intelligence, 1998, 20(2):113-125.

// https://blog.csdn.net/Dangkie/article/details/78996761

cv::Mat StegerLine(const cv::Mat image) {

cv::Mat img;

//高斯滤波

cv::GaussianBlur(image, img, cv::Size(31, 11), 5, 2);

//一阶偏导数

cv::Mat m1, m2;

m1 = (cv::Mat_<float>(1, 2) << 1 ,-1); //x偏导

m2 = (cv::Mat_<float>(2, 1) << 1 ,-1); //y偏导

cv::Mat dx, dy;

filter2D(img, dx, CV_32FC1, m1);

filter2D(img, dy, CV_32FC1, m2);

//二阶偏导数

cv::Mat m3, m4, m5;

m3 = (cv::Mat_<float>(1, 3) << 1, -2, 1); //二阶x偏导

m4 = (cv::Mat_<float>(3, 1) << 1, -2, 1); //二阶y偏导

m5 = (cv::Mat_<float>(2, 2) << 1, -1, -1, 1); //二阶xy偏导

cv::Mat dxx, dyy, dxy;

filter2D(img, dxx, CV_32FC1, m3);

filter2D(img, dyy, CV_32FC1, m4);

filter2D(img, dxy, CV_32FC1, m5);

//hessian矩阵

std::vector<double> Pt;

for (int i = 0; i < img.cols; i++) {

for (int j = 0; j < img.rows; j++) {

if (image.at<float>(j, i) > 200 / 255.0) {

cv::Mat hessian(2, 2, CV_32FC1);

hessian.at<float>(0, 0) = dxx.at<float>(j, i);

hessian.at<float>(0, 1) = dxy.at<float>(j, i);

hessian.at<float>(1, 0) = dxy.at<float>(j, i);

hessian.at<float>(1, 1) = dyy.at<float>(j, i);

cv::Mat eValue;

cv::Mat eVectors;

eigen(hessian, eValue, eVectors);

double nx, ny;

if (fabs(eValue.at<float>(0, 0)) >= fabs(eValue.at<float>(1, 0))) //求特征值最大时对应的特征向量

{

nx = eVectors.at<float>(0, 0);

ny = eVectors.at<float>(0, 1);

} else {

nx = eVectors.at<float>(1, 0);

ny = eVectors.at<float>(1, 1);

}

// std::cout<< "row, col, a, b, c, nx, ny: " << j << " "

// << i << " " << dxx.at<float>(j, i)<< " "

// << dxy.at<float>(j, i) << " " << dyy.at<float>(j, i)

// << " " << nx <<" " << ny <<" " << std::endl;

double t = -(nx * dx.at<float>(j, i) + ny * dy.at<float>(j, i)) /

(nx * nx * dxx.at<float>(j, i) + 2 * nx * ny * dxy.at<float>(j, i) +

ny * ny * dyy.at<float>(j, i));

if (fabs(t * nx) <= 0.5 && fabs(t * ny) <= 0.5) {

Pt.push_back(i);

Pt.push_back(j);

}

}

}

}

cv::Mat center_line = cv::Mat::zeros(image.rows, image.cols, CV_32FC1);

for (int k = 0; k < static_cast<int>(Pt.size() / 2); k++) {

int i = Pt[2 * k + 0];

int j = Pt[2 * k + 1];

center_line.ptr<float>(j)[i] = 1.0;

}

return center_line;

}

上述方法在CPU上的执行速度较低,将其代码实现转为在GPU上进行执行,主要分为三个步骤:

- void GaussianBlur();

- void ComputeDeriv();

- void ComputeHessian();

其中第一步高斯滤波可以在global_memory上执行,也可以把filter kernel的参数放到constant memory上以便加快内存访问效率,也可以用shared_memory的方式来进一步加快卷积运算,同时,二维高斯滤波是一种可分离滤波器,可以分解为一维row filter和col filter的组合,这样的实现在nvidia cuda官方的sample代码中已有实现,如果你已经在机器上安装了cuda的化,其位于”/usr/local/cuda/samples/3_Imaging/convolutionSeparable”.

这里先给出利用constant memory和global memory进行卷积运算的代码:

首先需要先生成Gaussian Kernel:

void GaussianMatrix2d(float *array, int radius_w, int radius_h,

float sigma_x_square, float sigma_y_square) {

int kernel_size_w = radius_w * 2 + 1;

float normalizationFactor = 0.0;

for (int i = -radius_w; i <= radius_w; i++) {

for (int j = -radius_h; j <= radius_h; j++) {

array[(j + radius_h) * kernel_size_w + i + radius_w] =

exp((-1.0 * i * i) / (2 * sigma_x_square) +

(-1.0 * j * j) / (2 * sigma_y_square));

normalizationFactor +=

array[(j + radius_h) * kernel_size_w + i + radius_w];

}

}

// Normalize, since the Gaussian is truncated, we would like to integrate to 1

for (int i = -radius_w; i <= radius_w; i++) {

for (int j = -radius_h; j <= radius_h; j++) {

array[(j + radius_h) * kernel_size_w + i + radius_w] /=

normalizationFactor;

}

}

}

const int kernel_w = 31;

const int kernel_h = 11;

__device__ __constant__ float d_cFilterKernel[kernel_w * kernel_h];

void setConvolutionKernel(float *h_Kernel)

{

cudaMemcpyToSymbol(d_cFilterKernel, h_Kernel, kernel_w * kernel_h * sizeof(float),

0, cudaMemcpyHostToDevice);

}

__global__ void GaussianBlurKernel_Constant(float* src, float* dst,

int width, int height) {

int col = blockIdx.x * blockDim.x + threadIdx.x;

int row = blockIdx.y * blockDim.y + threadIdx.y;

const int kernel_radius_w = (kernel_w - 1) / 2;

const int kernel_radius_h = (kernel_h - 1) / 2;

if(col < width && row < height) {

float convolved = 0.f; // temp variable for result

int locX, locY; // Local x and y

// Main loop. Iterates over all pixels in its domain, and then convolves the

// Gaussian matrix with the submatrix around the pixel.

for (int off_y = -kernel_radius_h ; off_y <= kernel_radius_h; off_y++)

{

for (int off_x = -kernel_radius_w; off_x <= kernel_radius_w; off_x++)

{

locX = min ( width-1, max(0, col + off_x));

locY = min ( height-1,max(0, row + off_y));

//convolved += src[locY* width + locX] *

// device_gaussian[(off_y + kernel_radius_h) * kernel_size_w + kernel_radius_w + off_x];

convolved += src[locY * width + locX] *

d_cFilterKernel[(off_y + kernel_radius_h) * kernel_w + off_x + kernel_radius_w];

//printf("%f ", d_cFilterKernel[(off_y + kernel_radius_h) * kernel_w + off_x + kernel_radius_w]);

}

}

dst[row * width + col] = min(convolved, 1.0);

}

}

对于第二步图像一阶微分和二阶微分的计算,由于滤波kernel比较简单, 直接采用global memory 进行计算:

__global__ void Filter2D_dxy_kernel(float* src, float* dst, int width, int height) {

// dxy kernel

// m5 = (cv::Mat_<float>(2, 2) << 1, -1, -1, 1); //二阶xy偏导

int col = threadIdx.x + blockIdx.x * blockDim.x;

int row = threadIdx.y + blockIdx.y * blockDim.y;

if(col < (width - 1) && row < (height - 1)) {

float value = src[row * width + col] -

src[row * width + col + 1] -

src[(row + 1) * width + col] +

src[(row + 1) * width + col + 1];

dst[row * width + col] = value;

}

}

第三步需要进行二维矩阵的特征值和特征向量计算,这里直接给出实现代码:

__global__ void ComputeHessianKernel(float* src, float* dx, float* dy,

float* dxx, float* dyy, float* dxy,

float* result,

int width, int height) {

int col = threadIdx.x + blockIdx.x * blockDim.x;

int row = threadIdx.y + blockIdx.y * blockDim.y;

if(col < width && row < height && src[row * width + col] > 0.75) {

// cal eigen vector and eigen value

/*

* a b

* b c

*/

float a = dxx[row * width + col];

float b = dxy[row * width + col];

float c = dyy[row * width + col];

//求特征值最大时对应的特征向量

float lambda1 = (a + c + sqrt((a - c) * (a - c) + 4 * b * b)) / 2.0;

float lambda2 = (a + c - sqrt((a - c) * (a - c) + 4 * b * b)) / 2.0;

double nx, ny;

if(fabs(b) < 1e-15) {

if(fabs(lambda1) >= fabs(lambda2)) {

nx = 1.0;

ny = 0.0;

} else {

nx = 0.0;

ny = 1.0;

}

} else {

if(fabs(lambda1) >= fabs(lambda2)) {

float tmp_nx = 1.0;

float tmp_ny = (lambda1 - a) / b;

nx = tmp_nx / sqrt(1.0 + tmp_ny * tmp_ny);

ny = tmp_ny / sqrt(1.0 + tmp_ny * tmp_ny);

} else {

float tmp_nx = 1.0;

float tmp_ny = (lambda2 - a) / b;

nx = tmp_nx / sqrt(1.0 + tmp_ny * tmp_ny);

ny = tmp_ny / sqrt(1.0 + tmp_ny * tmp_ny);

}

}

// if(row == 240 && col == 639) {

// printf("a, b, c, nx, ny: %f, %f, %f, %f, %f\n", a, b, c, nx, ny);

// }

double t = -(nx * dx[row * width + col] + ny * dy[row * width + col]) /

(nx * nx * dxx[row * width + col] + 2 * nx * ny * dxy[row * width + col] +

ny * ny * dyy[row * width + col]);

if(fabs(t * nx) <= 0.5 && fabs(t * ny) <= 0.5) {

result[row * width + col] = 1.0;

}

}

}

经过上面的优化,可以达到约7-8倍的速度提升,同时中心线提取的效果与原有的一致